What Is A Loss Function In Machine Learning

Overview of Loss Functions in Machine Learning

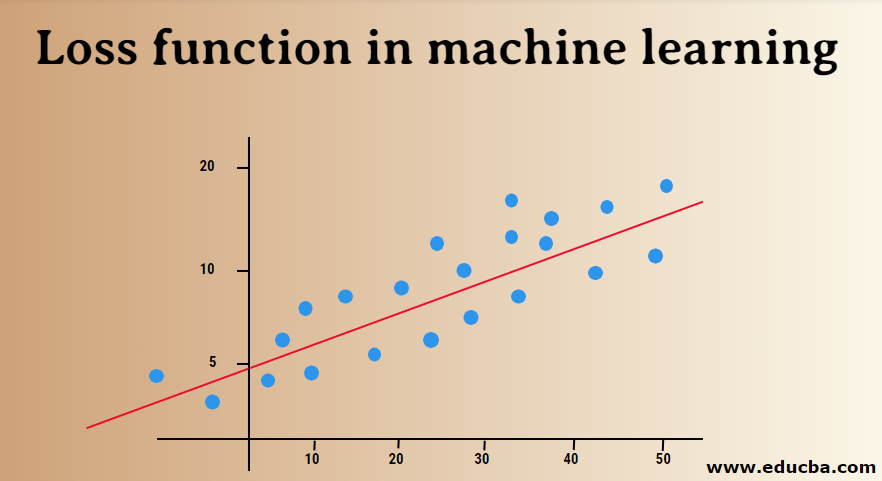

In machine learning, the loss function is defined as the deviation between the actual output and the model's predicted output for a single training example, while the average of the loss function over all training examples is termed the cost function. The difference computed by a loss function (such as regression loss, binary classification loss, and multiclass classification loss functions) is termed the error value; this error value grows with the gap between the actual and predicted values.

How Do Loss Functions Work?

The word 'loss' refers to the penalty for failing to achieve the expected output. If the value predicted by our model deviates greatly from the expected value, the loss function outputs a higher number; if the difference is small and the prediction is close to the expected value, it outputs a smaller number.

Here's an example in which we are trying to predict house sales prices in metro cities.

| Predicted Sales Price (in lakh) | Actual Sales Price (in lakh) | Deviation (Loss) |
| --- | --- | --- |
| Bangalore: 45, Pune: 35, Chennai: 40 | Bangalore: 45, Pune: 35, Chennai: 40 | 0 (all predictions are correct) |
| Bangalore: 40, Pune: 35, Chennai: 38 | Bangalore: 45, Pune: 35, Chennai: 40 | 5 lakh for Bangalore, 2 lakh for Chennai |
| Bangalore: 43, Pune: 30, Chennai: 45 | Bangalore: 45, Pune: 35, Chennai: 40 | 2 lakh for Bangalore, 5 lakh for Pune, 5 lakh for Chennai |

It is important to note that for some problems the amount of deviation doesn't matter; what matters is whether the value predicted by our model is right or wrong. Loss functions therefore differ based on the problem statement to which machine learning is being applied. The cost function is another term used interchangeably with the loss function, but it holds a slightly different meaning. A loss function is for a single training example, while a cost function is the average loss over the complete training dataset.
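The distinction between loss and cost can be sketched in a few lines of Python. This is only an illustration: it uses the figures from the second row of the table above, and it assumes the absolute deviation as the per-example loss, which the article does not prescribe. The names `absolute_loss`, `losses`, and `cost` are made up for this sketch.

```python
# Figures from the second row of the table above (in lakh).
predicted = {"Bangalore": 40, "Pune": 35, "Chennai": 38}
actual = {"Bangalore": 45, "Pune": 35, "Chennai": 40}


def absolute_loss(y_true, y_pred):
    """Loss for ONE training example (assumed here: absolute deviation)."""
    return abs(y_true - y_pred)


# One loss value per training example (per city).
losses = {city: absolute_loss(actual[city], predicted[city]) for city in actual}

# The cost is the average of the per-example losses over the whole dataset.
cost = sum(losses.values()) / len(losses)

print(losses)          # per-example losses: 5, 0 and 2 lakh
print(round(cost, 3))  # average loss, i.e. the cost
```

The per-city values are losses; the single averaged number is the cost.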

Types of Loss Functions in Machine Learning

Below are the different types of loss functions in machine learning:

1. Regression Loss Functions

Linear regression is the fundamental concept behind these functions. In linear regression we establish a linear relationship between a dependent variable (Y) and the independent variables (X); hence we try to fit the best line in space on these variables.

Y = X0 + X1 + X2 + X3 + X4….+ Xn

- X = Independent variables

- Y = Dependent variable

Mean Squared Error Loss

MSE (L2 error) measures the average squared difference between the actual values and the values predicted by the model. The output is a single number associated with a set of values. Our aim is to reduce MSE to improve the accuracy of the model.

Consider the linear equation y = mx + b. We can derive MSE as:

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(y^{(i)} - \left(m x^{(i)} + b\right)\right)^2$$

Here, $N$ is the total number of data points, $\frac{1}{N}\sum_{i=1}^{N}$ takes the mean, $y^{(i)}$ is the actual value, and $m x^{(i)} + b$ is its predicted value.
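A minimal Python sketch of this formula follows. The data points are hypothetical, chosen so that they lie exactly on the line y = 2x + 1, and the function name `mse` is just a label for this example.

```python
def mse(xs, ys, m, b):
    """Mean squared error of the line y = m*x + b over the points (xs, ys)."""
    n = len(xs)
    return sum((y - (m * x + b)) ** 2 for x, y in zip(xs, ys)) / n


# Hypothetical data points, made up for illustration.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]  # lies exactly on y = 2x + 1

perfect = mse(xs, ys, m=2.0, b=1.0)  # the true line: no error
worse = mse(xs, ys, m=2.0, b=0.0)    # every prediction is off by exactly 1

print(perfect)  # 0.0
print(worse)    # 1.0
```

Reducing MSE here amounts to moving `m` and `b` toward the values that make the squared differences shrink.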

Mean Squared Logarithmic Error Loss (MSLE)

MSLE measures the ratio between actual and predicted values. It introduces an asymmetry in the error curve. MSLE only cares about the percentage difference between actual and predicted values. It can be a good choice as a loss function when we want to predict house sales prices or bakery sales prices, and the data is continuous.

Here, the loss is calculated as the mean, over the observed data, of the squared differences between the log-transformed actual and predicted values, which can be given as:

$$L = \frac{1}{n}\sum_{i=1}^{n}\left(\log\left(y^{(i)} + 1\right) - \log\left(\hat{y}^{(i)} + 1\right)\right)^2$$
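The two properties mentioned above (sensitivity to the ratio rather than the absolute gap, and asymmetry) can be checked with a small sketch. The numbers are hypothetical and the function name `msle` is an assumption of this example; the natural logarithm is used.

```python
import math


def msle(y_true, y_pred):
    """Mean of squared differences between log(actual + 1) and log(predicted + 1)."""
    n = len(y_true)
    return sum(
        (math.log(t + 1) - math.log(p + 1)) ** 2 for t, p in zip(y_true, y_pred)
    ) / n


# Same ratio (prediction + 1 is half of actual + 1) at two very different scales.
small_scale = msle([99.0], [49.0])
large_scale = msle([9999.0], [4999.0])

# Same absolute gap of 50, once below and once above the actual value.
under = msle([100.0], [50.0])
over = msle([100.0], [150.0])

print(math.isclose(small_scale, large_scale))  # True: only the ratio matters
print(under > over)                            # True: under-prediction costs more
```

In this sketch an under-prediction is penalised more than an over-prediction of the same size, which is the asymmetry in the error curve.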

Mean Absolute Error (MAE)

MAE calculates the mean of the absolute differences between actual and predicted values. That means it measures the average magnitude of errors in a set of predicted values. The mean squared error is easier to solve, but the absolute error is more robust to outliers. Outliers are values that deviate extremely from the other observed data points.

MAE can be calculated as:

$$L = \frac{1}{n}\sum_{i=1}^{n}\left|y^{(i)} - \hat{y}^{(i)}\right|$$
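To make the robustness claim concrete, the sketch below compares MAE and MSE on the same hypothetical predictions, with and without a single outlier in the actual values. The data and function names are made up for illustration.

```python
def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean squared error, for comparison."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


y_pred = [11.0, 11.0, 12.0, 12.0]
y_clean = [10.0, 12.0, 11.0, 13.0]    # every prediction is off by 1
y_outlier = [10.0, 12.0, 11.0, 53.0]  # last actual value is an outlier (off by 41)

print(mae(y_clean, y_pred), mse(y_clean, y_pred))      # 1.0 1.0
print(mae(y_outlier, y_pred), mse(y_outlier, y_pred))  # 11.0 421.0
```

One outlier raises MAE from 1 to 11 but raises MSE from 1 to 421, because squaring magnifies large errors.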

2. Binary Classification Loss Functions

These loss functions are made to measure the performance of a classification model. Here, each data point is assigned one of two labels, i.e. either 0 or 1. They can be further classified as:

Binary Cross-Entropy

It's the default loss function for binary classification problems. Cross-entropy loss measures the performance of a classification model whose output is a probability value between 0 and 1. Cross-entropy loss increases as the predicted probability deviates from the actual label.
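The article does not spell out the formula, so the sketch below assumes the standard form, the mean of -(y log p + (1 - y) log(1 - p)), with probabilities clipped by a small `eps` to avoid log(0). The labels and probabilities are hypothetical.

```python
import math


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean binary cross-entropy; p_pred holds predicted probabilities of class 1."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to keep log() finite
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


labels = [1, 0, 1, 0]
confident_right = binary_cross_entropy(labels, [0.9, 0.1, 0.9, 0.1])
unsure = binary_cross_entropy(labels, [0.6, 0.4, 0.6, 0.4])
confident_wrong = binary_cross_entropy(labels, [0.1, 0.9, 0.1, 0.9])

print(round(confident_right, 3))  # 0.105
print(round(unsure, 3))           # 0.511
print(round(confident_wrong, 3))  # 2.303
```

The loss climbs as the predicted probability moves away from the actual label, and confident wrong answers are punished hardest.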

Hinge loss

Hinge loss can be used as an alternative to cross-entropy. It was initially developed for use with the support vector machine algorithm. Hinge loss is intended for classification problems in which the target values are in the set {-1, 1}. It assigns more error when there is a difference in sign between the actual and predicted values, which on some problems results in better performance than cross-entropy.
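A minimal sketch, assuming the usual definition max(0, 1 - y * s), where y is the label in {-1, 1} and s is the raw model score. The formula is not given in the article, and the scores below are hypothetical.

```python
def hinge_loss(y_true, scores):
    """Mean hinge loss: max(0, 1 - y * score) averaged over the examples."""
    return sum(max(0.0, 1.0 - y * s) for y, s in zip(y_true, scores)) / len(y_true)


y_true = [1, -1, 1, -1]

# Scores with the correct sign and a margin of at least 1: no penalty at all.
correct_confident = hinge_loss(y_true, [2.0, -3.0, 1.5, -1.0])

# The same scores with the sign flipped: every example is penalised.
wrong_sign = hinge_loss(y_true, [-2.0, 3.0, -1.5, 1.0])

print(correct_confident)  # 0.0
print(wrong_sign)         # 2.875
```

A sign mismatch makes `y * s` negative, so the penalty grows with how far the score sits on the wrong side.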

Squared Hinge Loss

An extension of hinge loss that simply calculates the square of the hinge loss score. It smooths the error function and makes it numerically easier to work with. It finds the classification boundary that gives the maximum margin between the data points of the different classes. Squared hinge loss is a good fit for yes-or-no decision problems, where probability deviation is not the concern.
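As a rough sketch, squared hinge loss squares each per-example hinge term before averaging. The definition max(0, 1 - y * s)^2 is the commonly used one and is an assumption here, as are the example scores.

```python
def squared_hinge_loss(y_true, scores):
    """Mean of max(0, 1 - y * score) squared."""
    return sum(max(0.0, 1.0 - y * s) ** 2 for y, s in zip(y_true, scores)) / len(y_true)


y_true = [1, -1]
scores = [0.5, 0.5]  # first: right side but inside the margin; second: wrong side

# Per-example hinge terms are 0.5 and 1.5, so the squared terms are 0.25 and 2.25.
loss = squared_hinge_loss(y_true, scores)
print(loss)  # 1.25
```

Squaring shrinks penalties below 1 and enlarges penalties above 1, so large margin violations dominate the total.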

3. Multi-class Classification Loss Functions

Multi-class classification covers predictive models in which the data points are assigned to one of more than two classes. Each class is assigned a unique value from 0 to (Number_of_classes - 1). It is highly recommended for image or text classification problems, where a single paper can have multiple topics.

Multi-class Cross-Entropy

In this case, the target values are in the set 0 to n, i.e. {0, 1, 2, 3, …, n}. It calculates a score that takes an average difference between actual and predicted probability values, and the score is minimized to reach the best possible accuracy. Multi-class cross-entropy is the default loss function for text classification problems.
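The sketch below assumes the standard definition with one-hot encoded targets: for each example, the negative sum of target * log(predicted probability), averaged over examples. The three-class targets and probabilities are hypothetical, and `eps` is only there to avoid log(0).

```python
import math


def categorical_cross_entropy(one_hot_targets, predicted_probs, eps=1e-12):
    """Mean cross-entropy between one-hot targets and predicted class probabilities."""
    total = 0.0
    for target, probs in zip(one_hot_targets, predicted_probs):
        total += -sum(t * math.log(max(p, eps)) for t, p in zip(target, probs))
    return total / len(one_hot_targets)


# Three examples, three classes; each target row is one-hot.
targets = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.2, 0.3, 0.5]]

loss = categorical_cross_entropy(targets, probs)
print(round(loss, 4))  # 0.4243
```

Only the probability assigned to the true class contributes to each example's loss, because every other target entry is zero.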

Sparse Multi-class Cross-Entropy

The one-hot encoding process makes multi-class cross-entropy difficult to use with a large number of data points. Sparse cross-entropy solves this problem by calculating the error without using one-hot encoding.
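A small sketch of the idea, under the assumption that labels are given as integer class indices: the loss simply looks up the predicted probability of the true class, so no one-hot vectors are built. The data is hypothetical, and the one-hot computation is included only to show that both give the same number.

```python
import math


def sparse_categorical_cross_entropy(class_indices, predicted_probs, eps=1e-12):
    """Mean cross-entropy using integer class labels instead of one-hot vectors."""
    total = 0.0
    for idx, probs in zip(class_indices, predicted_probs):
        total += -math.log(max(probs[idx], eps))  # only the true class matters
    return total / len(class_indices)


labels = [0, 1, 2]  # integer class indices, no one-hot encoding
probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.2, 0.3, 0.5]]

sparse_loss = sparse_categorical_cross_entropy(labels, probs)

# The same quantity computed the long way, from explicit one-hot targets.
one_hot = [[1.0 if j == idx else 0.0 for j in range(3)] for idx in labels]
dense_loss = -sum(
    t * math.log(p) for target, row in zip(one_hot, probs) for t, p in zip(target, row)
) / len(labels)

print(math.isclose(sparse_loss, dense_loss))  # True
```

With many classes, storing one integer per example instead of a full one-hot row is where the saving comes from.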

Kullback-Leibler Divergence Loss

KL divergence loss calculates the divergence between a probability distribution and a baseline distribution and finds out how much information is lost, in terms of bits. The output is a non-negative value that specifies how close two probability distributions are. To describe KL divergence from a probabilistic view, the likelihood ratio is used.
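A minimal sketch for discrete distributions, assuming the standard definition KL(P || Q) = sum of p_i * log2(p_i / q_i). The base-2 logarithm gives the result in bits, and the ratio p_i / q_i is the likelihood ratio mentioned above. The two distributions are hypothetical, and the function assumes q_i > 0 wherever p_i > 0.

```python
import math


def kl_divergence_bits(p, q):
    """KL(P || Q) in bits for discrete distributions; terms with p_i == 0 contribute 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


p = [0.5, 0.5]    # distribution of interest
q = [0.25, 0.75]  # baseline distribution

same = kl_divergence_bits(p, p)
p_from_q = kl_divergence_bits(p, q)
q_from_p = kl_divergence_bits(q, p)

print(same)                # 0.0: identical distributions lose no information
print(round(p_from_q, 4))  # 0.2075
print(round(q_from_p, 4))  # 0.1887
```

The two directions give different values, so KL divergence is not symmetric and the choice of baseline matters.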

In this article, we first looked at how loss functions work and then explored a list of loss functions with use case examples. However, understanding them in practice is more beneficial, so try to read more and implement them. It will clarify your doubts thoroughly.


Source: https://www.educba.com/loss-functions-in-machine-learning/

Posted by: gogginsmakeles.blogspot.com
